It's not just cute little planes flying around. It's the 100% auditable policies that describe how a swarm collectively interprets information and self-organizes to attack a target. It is interpreting information in a new way.

It's using "KEEL Technology" to determine "what to do and how to do it".

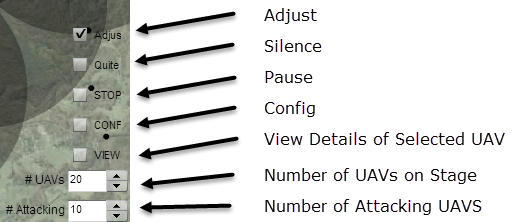

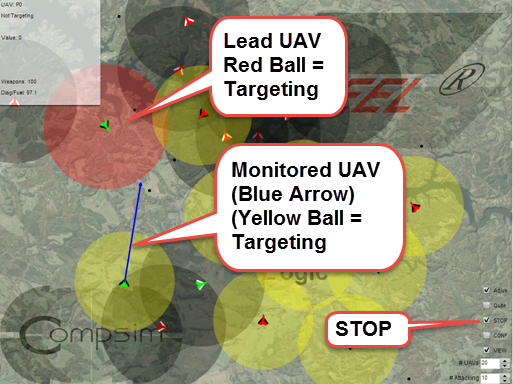

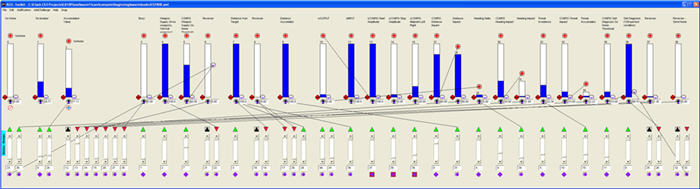

| Aircraft Indicators | |
| --- | --- |
| White Aircraft Wings | Unassigned (will be in a gray range ball) |
| Black Aircraft Wings | Assigned to Target (Red range ball is the leader; Yellow range balls are followers) |
| Green Aircraft Body | Going directly to planned location |
| Red Aircraft Body | Collision Avoidance in process |
| Blue Arrow showing heading | Monitored Aircraft (selected by user's mouse) |

| Controls | |
| --- | --- |
| Adjust | Adjust the number of assigned UAVs after each bombing mission |
| Quiet | Silence the bomb explosions |
| Stop | Pause the action (Config and View still active) |
| Config | Policy Control |
| View | Inputs to KEEL Engine for Monitored Aircraft |
| # UAVs Stepper | Adjust the number of UAVs on the screen |
| # Attackers Stepper | Show / Adjust the number of UAVs to assign to the next bombing run (modified automatically if Adjust checkbox selected) |

In the near future, battles will be fought by groups of autonomous weapon systems directed by policies defined by the humans in control. Today sensors are already controlling how manned aircraft systems perform. The amount of data generated, and the response time required, have long since outpaced the capabilities of human operators. This trend will continue.

The conventional practice of controlling UAVs remotely (i.e., each UAV having its own human operator who watches a computer screen and directs the operation with a joystick that sends control signals directly to a single UAV) will not be sufficient when the UAVs are operating in groups or swarms. These UAVs will be operating in close proximity. Humans make errors because their attention span is limited, causing them to lack sufficient situational awareness. Often humans fail to understand the situation in which the units they are controlling are operating. Limited short-term memory does not allow humans to track a large number of interacting objects. And many times humans simply fail to exercise good judgment. Judgment is more than just following a set of rules. Judgment involves interpreting the importance of many dynamically changing, interactive data items. All of these reasons highlight why humans cannot effectively be the control system for this type of equipment.

For these reasons and more, UAVs operating in swarms or groups must be able to operate with a large degree of autonomy. They must process multiple sensors in parallel and interpret the sensor data according to policies that describe how information is to be processed in the pursuit of goals. Since the UAVs will be operating in an unlimited range of scenarios, it is not possible to "script" how they are to perform in all situations. This means that they must be able to exercise reason and judgment as they pursue their goals.

The UAVs will not be able to rely on humans to confirm their decisions or actions, even if the humans have the ability to process the real-time information available to the UAVs. The UAVs must be able to operate with limited or non-existent data links to ground support organizations, as these links might be used to track and destroy the UAVs themselves.

With small UAVs operating in close proximity and pursuing collective goals, they must be able to react collectively and delegate responsibility effectively. This means that they must all interpret policies the same way.

When defining the behavior of autonomous devices, it is important to differentiate policies and rules. Both are important, but they are different.

Rules: Rules are processed sequentially with conventional IF | THEN | ELSE logic. Rule processing is what computers have been doing since the advent of computers. A human's left-brain processes language with a set of sequential rules.

Policies: Policies describe how to interpret information to address problems. They commonly deal with complex inter-relationships. Policies define how to manage risk and reward situations. In human behavioral terms, policies might describe fight / flight activities. Policies will describe how to choose between different options, or how to allocate resources across several goals. Policies describe how to interpret information and balance inter-related alternatives. The interpretation of policies is an "information fusion" process. In humans, this is a right-brain process where information items are processed in parallel.

A "system" will include both a rule-processing capability AND a policy-processing capability.
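To make the distinction concrete, here is a minimal Python sketch. It is not KEEL code: the function names, the thresholds, and the weighted-average blend are all illustrative assumptions, since the text does not say how a KEEL engine combines its inputs.

```python
def rule_based_action(fuel_pct: float, weapons_pct: float) -> str:
    """Rule style: sequential IF / THEN / ELSE, the first matching branch wins.

    The 20% and 10% thresholds are hypothetical.
    """
    if fuel_pct < 20.0:
        return "return_to_base"
    elif weapons_pct < 10.0:
        return "return_to_base"
    else:
        return "continue_mission"


def policy_based_value(fuel_pct: float, weapons_pct: float,
                       fuel_importance: float = 1.0,
                       weapons_importance: float = 1.0) -> float:
    """Policy style: every input contributes to one graded value (0 to 100).

    A weighted average stands in for the fusion step. This is an assumed
    placeholder, not the KEEL engine's actual method.
    """
    total = fuel_importance + weapons_importance
    return (fuel_importance * fuel_pct
            + weapons_importance * weapons_pct) / total
```

The rule returns one of a fixed set of answers, while the policy returns a graded value that shifts smoothly as the inputs or their importance change.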

This demonstration has 20 UAVs. Each UAV processes "rules" that allow them to fly from where they are to target points that are selected randomly. Rules are processed using conventional computer logic. Each UAV also has 2 KEEL policy processing "engines".

One KEEL engine describes how to avoid collisions by integrating the UAV's heading with the distance and relative position of nearby UAVs. The UAV will adjust its heading based on the position of the other UAV. The magnitude of the adjustment is described as a policy using the KEEL "dynamic graphical language" and implemented as a KEEL engine.
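The shape of such a heading adjustment can be sketched in Python as follows. This is only an illustration under stated assumptions: the actual policy is drawn in the KEEL graphical language and is not given here, so the 30-degree turn limit, the linear closeness term, the cosine term, and the names `avoidance_adjustment` and `MAX_TURN_DEG` are all hypothetical.

```python
import math

MAX_TURN_DEG = 30.0  # hypothetical limit on a single heading correction


def avoidance_adjustment(own_heading_deg: float, bearing_to_other_deg: float,
                         distance: float, avoid_range: float) -> float:
    """Return a signed heading change in degrees (negative = turn left).

    Angles are compass-style degrees. The result grows as the other UAV
    gets closer and as it sits more directly ahead.
    """
    if distance >= avoid_range:
        return 0.0  # the other UAV is outside the avoidance range
    # Signed angle from own heading to the other UAV, in [-180, 180).
    relative = (bearing_to_other_deg - own_heading_deg + 180.0) % 360.0 - 180.0
    if abs(relative) >= 90.0:
        return 0.0  # the other UAV is abeam or behind (assumed to be ignored)
    closeness = 1.0 - distance / avoid_range        # 0 at the edge, 1 at contact
    aheadness = math.cos(math.radians(relative))    # 1 dead ahead, 0 abeam
    magnitude = MAX_TURN_DEG * closeness * aheadness
    # Turn away from the side the other UAV is on.
    return -magnitude if relative >= 0.0 else magnitude
```

For example, with the other UAV slightly to the right and halfway inside the avoidance range, the function returns a moderate left turn; the same geometry at a shorter distance returns a larger one.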

The other KEEL engine processes a policy that performs a self-evaluation to determine its capabilities when deciding whether it is appropriate to attack specific targets. All UAVs process the same information about the target, but relative to their own situation. This information is then shared so each UAV in the swarm understands how its self-analysis compares to the others. The policy objective is that the most appropriate UAVs attack the target.

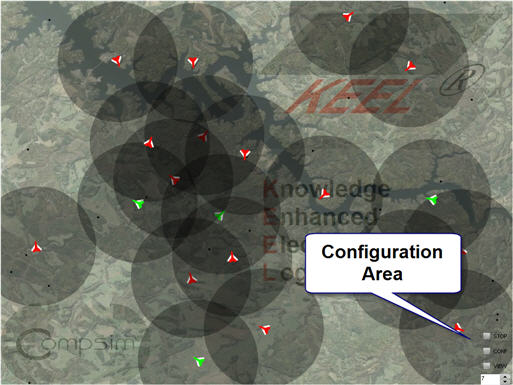

When the demonstration is started, the 20 UAVs are positioned randomly on the battlefield. They fly to random locations indicated by black dots. When they reach their target points they generate a new point to fly toward. All the time they are utilizing their KEEL-based collision avoidance skills to avoid colliding with other UAVs. When they are utilizing KEEL-based collision avoidance, their body turns red. When they are flying directly toward their target point without any obstacles nearby, their body turns green.

The lower right corner of the map contains a "Configuration Area".

At the bottom of this area is a "numeric stepper" that allows the user to select the number of UAVs to be assigned to attack the next target. The user can select a number from 1 to 10 (or a number from 1 to the number of UAVs on the screen, whichever is less). When the demonstration is started, this is set to 10, and the number of UAVs on the screen is set to 20. This value is used to set the impact of a target for the UAV swarm.

The second "numeric stepper" (up from the bottom) allows the user to select the number of UAVs to be displayed on the screen. It is set to 20 when the demonstration is loaded.

When the user clicks on the map (a location on the map not occupied by one of the UAVs as designated by the circle around them), a new enemy target is created. The numeric stepper at the bottom indicates the number of UAVs that should be used to attack that target. This means that if the numeric stepper is set to 10 (as shown above), and the user clicks on the screen, then 10 UAVs will attack that point. When UAVs have been assigned to attack a target, their wings turn black. When they are not attacking a specific target, their wings are white.

Each UAV utilizes its KEEL engine to perform a self-evaluation relative to the new target. The UAVs broadcast their self-evaluation value and (using the same policy) decide who should take the lead and who should provide support when attacking the target. The UAV assuming leadership will be identified on the map with a red range identifier. The UAVs assuming support roles will be identified on the map with a yellow range identifier.

When the user clicks on the screen, all of the UAVs self-evaluate their ability to attack that specific target. The UAVs with the highest rating will attack the target. If a UAV is in the process of attacking one target, it will not divert to a new target.
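The self-assignment described above can be sketched as a ranking over the shared self-evaluation values. This is a minimal Python illustration, not the demonstration's code: the `Uav` class, its fields, and `assign_roles` are hypothetical names, and the sketch assumes every UAV sees the same list of broadcast values.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Uav:
    name: str
    value: float                 # self-evaluation value for the new target
    busy: bool = False           # already attacking another target
    role: Optional[str] = None   # "leader", "follower", or None


def assign_roles(swarm: List[Uav], attackers_requested: int) -> List[Uav]:
    """Pick the highest-rated available UAVs; the top one leads."""
    # UAVs already attacking a target do not divert to the new one.
    available = [u for u in swarm if not u.busy]
    ranked = sorted(available, key=lambda u: u.value, reverse=True)
    chosen = ranked[:attackers_requested]
    for rank, uav in enumerate(chosen, start=1):
        uav.busy = True
        uav.role = "leader" if rank == 1 else "follower"
    return chosen
```

Because each UAV would run the same ranking on the same shared values, all of them reach the same conclusion about who leads and who follows without a central coordinator.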

Each time the user clicks on the screen, the numeric stepper is automatically assigned a new value between 1 and 10. The user can manually adjust this value. The next time the user clicks on the screen, this number of UAVs will attempt to self-assign. UAVs will self-assign only if they are available. The UAVs with the gray range identifiers are available for assignment.

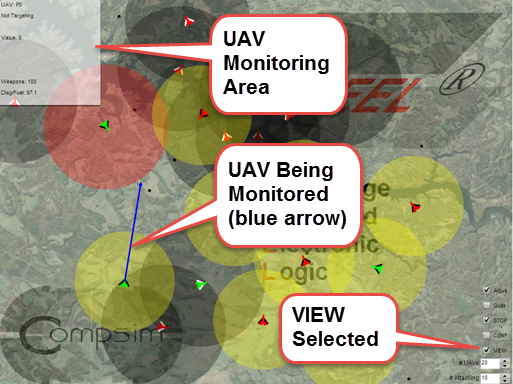

A VIEW checkbox is located above the numeric stepper. Checking this box opens a window in the upper right corner that displays how one of the UAVs is evaluating its situation for attacking a target.

The UAV being monitored is shown with a blue arrow extending ahead of it. You can change the UAV that is being monitored by clicking on another UAV.

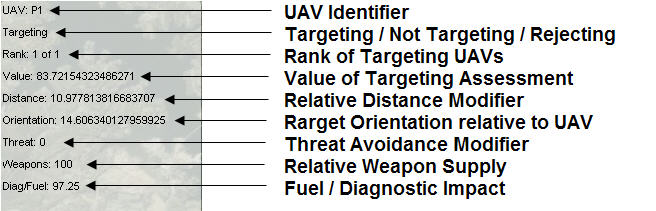

Each UAV has its own name. In this case they are named P1 through P20.

The second line of the monitoring window describes its state. It is "Targeting" (UAV wings are black) if it is traveling toward a specific target location (where the user clicked on the screen). It is "Not Targeting" (UAV wings are white) if it is randomly flying to new points without the user identifying a specific target. It will show "Rejected Assignment" if no UAVs are available to attack the target (according to the KEEL policy). This case exists because thresholds are established with the KEEL policy, which require a level of weapons to be available and require a satisfactory self-diagnosis status (including fuel) in order to be assigned a target. If there are available UAVs to pursue the target (other than the UAV being monitored), then the monitored UAV will show "Not Targeting". If no UAVs are available, then the monitored UAV will show "Rejected Assignment".
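The three states can be summarized in a short Python sketch. The function names and parameters are hypothetical, and treating the thresholds as inclusive (a UAV exactly at the threshold still qualifies) is an assumption; the text only says that weapon and fuel levels must meet thresholds.

```python
def is_available(assigned: bool, weapons_pct: float, fuel_pct: float,
                 min_weapons_pct: float, min_fuel_pct: float) -> bool:
    """A UAV can take a new target only if it is unassigned and meets
    the weapon and fuel thresholds (inclusive comparison is assumed)."""
    return (not assigned
            and weapons_pct >= min_weapons_pct
            and fuel_pct >= min_fuel_pct)


def monitor_state(monitored_assigned: bool, any_uav_available: bool) -> str:
    """State line shown for the monitored UAV."""
    if monitored_assigned:
        return "Targeting"
    if any_uav_available:
        # Some UAV in the swarm can pursue the target.
        return "Not Targeting"
    return "Rejected Assignment"
```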

The third line indicates the rank in the attacking order. The image above shows that there was only 1 UAV assigned to attack a target and this UAV is the leader (number 1 rank) or 1 of 1.

The Value of the UAV assessment (as performed by the KEEL Engine) is shown on the next line.

The Distance line shows the normalized distance number, which indicates the relative distance to the target. It is used as an input to the KEEL Engine when determining how each UAV ranks in the targeting assessment. The Distance number of 10.97 indicates the UAV is close to the target. A larger number would indicate it is farther away.

The Orientation line shows the heading of the UAV relative to the target selected by the user. The 14.6 value shown above indicates the UAV was heading slightly away from the target when the target was identified. A larger number would indicate it is heading farther away from the target. A value of 100 would indicate the UAV is heading directly away from the target.
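One way such a 0 to 100 orientation number could be computed is sketched below in Python. The text only states that 100 means heading directly away and that larger values mean heading farther away; the linear mapping, the assumption that 0 means heading straight at the target, and the name `orientation_value` are illustrative guesses.

```python
def orientation_value(heading_deg: float, bearing_to_target_deg: float) -> float:
    """Map the angle between heading and target bearing onto 0..100.

    0 = heading straight at the target (assumed), 100 = directly away.
    """
    # Smallest absolute angle between the two directions, in 0..180 degrees.
    diff = abs((bearing_to_target_deg - heading_deg + 180.0) % 360.0 - 180.0)
    return diff / 180.0 * 100.0
```

Under this assumed linear mapping, a value of 14.6 would correspond to a heading roughly 26 degrees off the direct bearing to the target.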

The Threat line shows if the UAV is in the process of making an evasive maneuver to avoid a threat (or collision). The magnitude of this number (0 to 100) shows the magnitude of the evasive maneuver it is involved in performing at the instant the target analysis is requested.

The Weapons line shows the relative weapon supply. The 100 value shown above indicates that 100% of the UAV's weapons are available.

The Diag/Fuel line represents the self-diagnosis status of the UAV. In this demo, it is only showing the percent of fuel available.

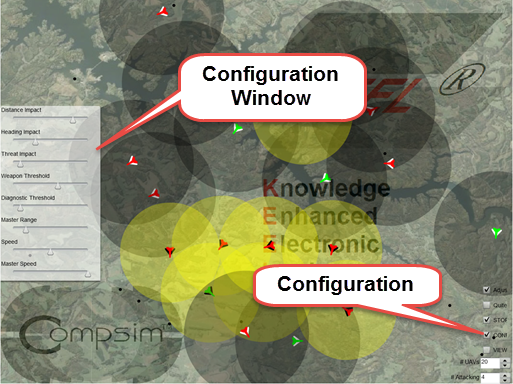

Each UAV is processing the self-evaluation when a new target is selected by the user. The KEEL "engines" processing this policy can be controlled externally. Judgment (the interpretation of policy) is accomplished by integrating the real-world values with the potential values. The potential values could be fixed at the factory, or as in this case, can be manipulated externally. In this demonstration, we allow the user to change how all of the UAVs interpret the policy by opening and manipulating a configuration window.

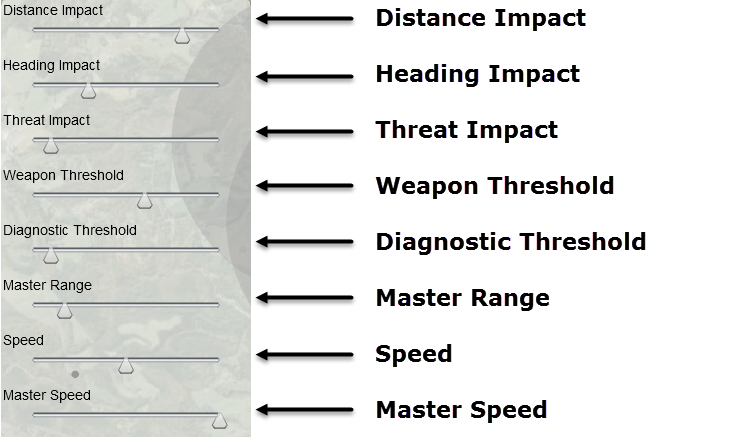

A CONF checkbox is used to open the Configuration window.

The image above shows the default policy configuration that is set when the demonstration is started. It should be noted that these configuration parameters are not considered independently by the KEEL engine. There is a complex, non-linear relationship between the distance between the UAV and the Target and the Orientation of the Target relative to the UAV Heading that can most easily be understood by viewing the KEEL dynamic graphical language. The bottom 2 configuration items (Master Range and Speed) are not directly part of the policy. The Master Range setting allows the user to modify the collision avoidance range of the lead UAV so it is smaller than that of the other UAVs. This means that the "lead UAV" will not adjust its path as often as the other UAVs, allowing it to proceed in a straighter path to the target. It will "assume" that the other UAVs will use their own collision avoidance policy and be subservient to the leader. The Speed control is used to control the speed of all UAVs in the system.

The image below shows a screen shot of the KEEL source code that defines the policy for interpreting the information that is used in this demonstration. The "dynamic" nature of the source code makes it ideal for describing complex non-linear behavior because the user gets immediate feedback on how the information is interpreted.

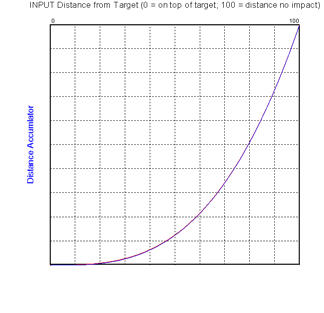

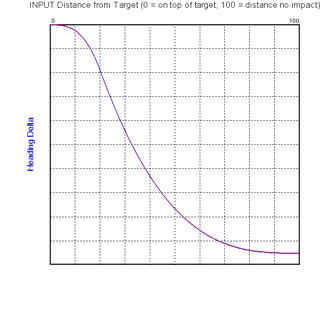

Using this language it is easy to define non-linear relationships for how information is to be integrated (fused). There are no complex mathematical formulas hidden behind the design. It is all there for easy manipulation. The following graphs show how distance and heading individually impact the analysis of which UAVs should participate in an attack of a particular target:

The first graph shows a blocking signal for distance. This means that the farther the UAV is from the target, the less value it has when calculating an overall value. When it is out of range, it will not be assigned. In this demo, all UAVs will be within range. The second curve shows the impact of heading when selecting the target. This graph shows that when a UAV is close to the target, its heading plays a significant role in its evaluation. As it gets farther away, the impact of heading is decreased. Even at the maximum range, however, it will still have a small impact on the evaluation. Another curve will define how threat avoidance impacts the assignment decision. The policy (in this demonstration) suggests that if a UAV is actively involved in avoiding a collision (or incoming fire) it will be less valuable in assignment for a new target. This is not just a yes / no value, but a relative value. The more it is involved in an evasive maneuver, the more this will impact its availability for assignment. The Configuration window allows the "importance" of these individual interpretations to be adjusted before they are integrated into the final analysis. The KEEL engine integrates the values from the different curves to determine an overall value.
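The interplay of these curves can be approximated in a few lines of Python. This is a rough sketch under stated assumptions, not the KEEL policy: the real curves are non-linear and drawn graphically, whereas the straight-line curves, the 0.1 floor, the multiplicative fusion, and all function and parameter names below are placeholders chosen only to reproduce the qualitative behavior described.

```python
def distance_value(distance: float, max_range: float) -> float:
    """Blocking curve: 1.0 at the target, 0.0 at max_range and beyond."""
    if distance >= max_range:
        return 0.0
    return 1.0 - distance / max_range


def heading_impact(distance: float, max_range: float, floor: float = 0.1) -> float:
    """How much heading matters: 1.0 when close, shrinking to `floor`
    (a small but non-zero impact) at maximum range."""
    frac = min(max(distance / max_range, 0.0), 1.0)
    return 1.0 - (1.0 - floor) * frac


def self_evaluation(distance: float, orientation: float, threat: float,
                    max_range: float, heading_importance: float = 1.0,
                    threat_importance: float = 1.0) -> float:
    """Fuse the curves into one 0..100 value.

    `orientation` and `threat` are the 0..100 monitor values; the two
    importance arguments stand in for the Configuration window settings.
    """
    heading_penalty = min(1.0, heading_importance
                          * heading_impact(distance, max_range)
                          * orientation / 100.0)
    threat_penalty = min(1.0, threat_importance * threat / 100.0)
    return (100.0 * distance_value(distance, max_range)
            * (1.0 - heading_penalty) * (1.0 - threat_penalty))
```

In this sketch a UAV at maximum range scores zero regardless of its other inputs, which mirrors the blocking behavior of the distance curve, while heading and threat reduce the score by degrees rather than acting as yes / no switches.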

It should be apparent that describing how the UAV should interpret these variables using the English language would be very difficult and subject to human interpretation. With KEEL, one creates curves and observes how the UAVs respond in order to determine the most effective policies. The policies (curves) are tuned and refined without resorting to complex mathematics.

The user can interact with the demonstration by clicking on the map to identify new targets. Each click causes the UAVs to self-evaluate and determine who attacks that target. Multiple targets can be assigned. The available UAVs will determine who attacks.

When new targets are assigned, a single UAV with a red range identifier will be selected. The remaining number of UAVs defined by the numeric stepper will be assigned and shown with a yellow range identifier. This assumes that there are available UAVs to respond to the new target. This will not be the case if their fuel or weapons drop below the thresholds set in the configuration screen or if they are all assigned to other targets.

The user can manually adjust the numeric stepper to determine how many UAVs will be assigned to the next target.

The user can monitor different UAVs by clicking on them on the map. If the View check box is checked, the information for that UAV will be displayed in the monitor window. At any time the user can click on the STOP check box and stop the action. While the action is paused, the user can select different UAVs and view their status in the monitor window. This may be helpful to view situations with multiple targets selected simultaneously and multiple UAVs accepting the assignment. Un-checking the STOP checkbox will resume the operation.

The user can adjust the Configuration settings to change how each of the UAVs performs its self-assessment. These configuration inputs are used to drive the KEEL engine in the same manner as the real-world inputs detected by each UAV. Because KEEL engines process all information (simultaneously / in parallel) during a "cognitive cycle", configuration inputs can be adjusted in real time. This allows policies for UAVs to be adjusted in real time as the circumstances dictate.

If the user clicks on the Stop checkbox, the UAVs will stop moving. When the simulation is stopped, the user can still adjust the numeric stepper to identify the number of UAVs that will be assigned to a new target. When the simulation is stopped, the user can click on the background to assign UAVs to the new target. With the simulation stopped, all previously assigned UAVs will be released and new ones assigned. Then the user can click on individual UAVs and see how they ranked in the selection process. Click on different places and see how the UAVs will self-assign. Adjust the configuration settings and observe how this impacts the policy for participation. When the Stop checkbox is selected, only one target can be selected at a time. Un-checking the Stop checkbox allows multiple targets to be simultaneously selected and attacked. When the Stop checkbox is "unchecked" a new number of UAVs will be assigned to each new target. When the Stop checkbox is "checked" the number of UAVs to be assigned will remain constant unless manually changed.

KEEL Technology allows complex adaptive behavior to be developed for, and executed in, autonomous devices. These behaviors are superior to those developed with static IF | THEN | ELSE logic. While it would be possible to develop the complex policies using differential calculus, this would not be cost effective. With KEEL Technology these complex behaviors (policies) can be developed in minutes, not weeks or years. And they can all be developed without requiring the services of mathematicians or software engineers. Using KEEL Technology, domain experts can easily begin to integrate alternative behaviors exhibiting disinformation, redirection, and subterfuge as part of their goal-seeking techniques. If a human strategist can imagine the desired behavior, it can be packaged and deployed into autonomous devices. These devices can then operate with full independence, or their behavior (policies) can be modified while the UAVs are in operation, whatever the policy maker desires.

Copyright , Compsim LLC, All rights reserved